Forecast Measures

General

Forecast accuracy is a criterion for evaluating how suitable a particular forecasting method might be for a particular data set. Forecast accuracy is the main reason to select one forecast model over another. Forecast accuracy is also the reason to tune the parameters for a given model. Forecast accuracy refers to how well a current forecasting model is able to reproduce the data that is already known.

Six measures of forecasting accuracy are defined and quantified: MAE, ME, MAPE, PVE, Tracking signal, and Theil's U-statistic. In addition to these, R Bar Squared is available when running one of the regression models. Forecast models can be evaluated based on the value of one or more of these measures.

Notation

| Symbol | Meaning |
|---|---|
| n | Number of observations. |
| Dt | Observed demand in period t. |
| Ft | Forecast in period t. |
| Δ | Delta (tracking signal smoothing parameter). |
| et | Forecast error in period t: et = Dt - Ft |

The available measures are:

- MAE

- Value of MAE

- ME

- RME

- MAPE

- WMAPE

- MSE

- Variance in demand

- PVE

- Tracking Signal

- Adjustment Factor

- Performance Factor

- R Squared

- R Bar Squared

- Service Level

- Fill Rate Service

MAE

MAE is an abbreviation for mean absolute error. The formula is as follows:

$$\mathrm{MAE} = \frac{1}{n} \sum_{t=1}^{n} \lvert e_t \rvert$$

The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).
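As a minimal sketch of this calculation, assuming the standard definition of mean absolute error and made-up demand and forecast values, the following Python snippet restricts the computation to the latest n periods. The function name `mean_absolute_error` and the parameter `n_periods` are illustrative only and are not part of the product.

```python
def mean_absolute_error(demand, forecast, n_periods):
    """Average of |D_t - F_t| over the latest n_periods (assumed standard MAE)."""
    # Keep only the most recent n_periods observations (series are oldest first).
    recent = list(zip(demand, forecast))[-n_periods:]
    return sum(abs(d - f) for d, f in recent) / len(recent)


# Illustrative data, oldest period first.
demand = [80, 100, 120, 90, 110]
forecast = [95, 105, 110, 100, 110]

mae = mean_absolute_error(demand, forecast, n_periods=4)
print(mae)  # 6.25
```

With four forecast error periods, the oldest observation is ignored and the absolute errors 5, 10, 10 and 0 average to 6.25.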

Value of MAE

The value of MAE is MAE multiplied by the inventory value for the item. Forecasted items with the highest value of MAE are the candidates where improved accuracy is most profitable.

ME

ME is an abbreviation for mean error. The formula is as follows:

$$\mathrm{ME} = \frac{1}{n} \sum_{t=1}^{n} e_t$$

The usefulness of ME is limited because positive and negative errors tend to offset one another. ME only indicates whether there is systematic under- or over-forecasting, also called forecast bias. The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).
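The offsetting effect can be illustrated with a short Python sketch. It assumes the error definition et = Dt - Ft from the notation above and uses invented numbers; the function names are illustrative.

```python
def mean_error(demand, forecast):
    """ME: average of e_t = D_t - F_t; the sign shows the direction of the bias."""
    return sum(d - f for d, f in zip(demand, forecast)) / len(demand)


def mean_absolute_error(demand, forecast):
    """MAE: average of |e_t|, shown for comparison."""
    return sum(abs(d - f) for d, f in zip(demand, forecast)) / len(demand)


forecast = [100, 100, 100, 100]
unbiased = [110, 90, 105, 95]   # errors +10, -10, +5, -5 cancel out
under = [110, 105, 108, 101]    # demand is always above the forecast

print(mean_error(unbiased, forecast), mean_absolute_error(unbiased, forecast))  # 0.0 7.5
print(mean_error(under, forecast))  # 6.0
```

In the first series ME is zero although every period has an error, so ME says nothing about accuracy. In the second series the positive ME signals systematic under-forecasting.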

RME

RME is an abbreviation for relative mean error. The formula is as follows:

The usefulness of RME is limited because positive and negative errors tend to offset one another. RME only indicates whether there is systematic under- or over-forecasting, also called forecast bias. The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data). The part with the highest RME is the part with the highest forecast bias.

Bias in the forecast is undesirable because it indicates that the forecast is consistently too optimistic (over-forecasting, negative RME) or too pessimistic (under-forecasting, positive RME).

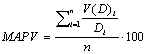

MAPE

MAPE is an abbreviation for mean absolute percentage error. The formula is as follows:

MAPE expresses the relative inaccuracy in the forecast for each item. Items with the highest MAPE should benefit from increased forecast accuracy. The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).

WMAPE

WMAPE is an abbreviation for weighted mean absolute percentage error. The formula is as follows:

WMAPE expresses the relative inaccuracy in the forecast for each item, with periods that have large absolute errors given more weight than periods that have small absolute errors. This prevents periods with small absolute errors but huge percentage errors from dominating the measurement. Items with the highest WMAPE should benefit from increased forecast accuracy. The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).
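The difference between MAPE and WMAPE can be sketched in Python. This is an illustration under stated assumptions, not the product's implementation: MAPE is taken as the mean of |et| / Dt in percent with zero-demand periods skipped, and WMAPE is taken in its common form, the sum of |et| divided by the sum of Dt. The data is invented.

```python
def mape(demand, forecast):
    """Mean of |e_t| / D_t in percent; zero-demand periods are skipped (assumption)."""
    ratios = [abs(d - f) / d for d, f in zip(demand, forecast) if d != 0]
    return 100 * sum(ratios) / len(ratios)


def wmape(demand, forecast):
    """Sum of |e_t| divided by sum of D_t, in percent (assumed common form)."""
    total_abs_error = sum(abs(d - f) for d, f in zip(demand, forecast))
    return 100 * total_abs_error / sum(demand)


# The third period has tiny demand: an error of 4 units is a 200% error.
demand = [200, 180, 2, 220]
forecast = [190, 190, 6, 210]

print(round(mape(demand, forecast), 1))   # 53.8
print(round(wmape(demand, forecast), 1))  # 5.6
```

The single low-volume period pushes MAPE above 50%, while WMAPE stays close to the roughly 5% error seen in the high-volume periods.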

MSE

MSE is an abbreviation for mean squared error. The formula is as follows:

MSE expresses the squared inaccuracy in the forecast for each item. Items with the highest MSE should benefit from increased forecast accuracy. MSE penalizes a part with a single large forecast error more heavily than a part with the same total forecast error distributed more evenly over the measurement period. The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).

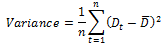
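The penalizing property of MSE can be shown with a small Python sketch, assuming the standard definition (the mean of the squared errors et) and invented data. Both parts below have the same total absolute error of 40 units.

```python
def mean_squared_error(demand, forecast):
    """MSE: average of (D_t - F_t) squared (assumed standard definition)."""
    return sum((d - f) ** 2 for d, f in zip(demand, forecast)) / len(demand)


forecast = [100, 100, 100, 100]
spike = [140, 100, 100, 100]   # one error of 40 units
even = [110, 90, 110, 90]      # four errors of 10 units each

print(mean_squared_error(spike, forecast))  # 400.0
print(mean_squared_error(even, forecast))   # 100.0
```

The part with the single large error gets an MSE four times higher, although the MAE of both parts is 10.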

Variance in demand

This is the variance of the demand vector around its average. The formula is as follows:

![]()

The variance expresses the variation in the demand pattern of the part. Items with high demand variance are usually difficult to forecast and might need extra attention. The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).

PVE

PVE is an abbreviation for percentage variation explained. The formulas are as follows:

![]()

![]()

PVE indicates what portion of the inherent variation in the demand is explained by the forecast model. PVE shows whether the forecast is getting better or worse over time, since it adjusts for changes in demand volatility. It is also a good benchmarking measure. If PVE is less than zero, the forecast is actually increasing the variation of the demand. In this case, change the forecast model used or adjust the parameters (increase alpha, or the number of moving average periods).

The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).
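The behavior described for PVE, including the negative case, can be sketched in Python. The exact formulas are not reproduced in this text, so the sketch assumes one common form, PVE = 100 × (1 - MSE / variance of demand), with the variance computed with divisor n. Both choices are assumptions, and the data is invented.

```python
def variance(values):
    """Variance around the mean (assumption: divisor n, not n - 1)."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def pve(demand, forecast):
    """Assumed form: 100 * (1 - MSE / Var(D)). Undefined for constant demand."""
    mse = sum((d - f) ** 2 for d, f in zip(demand, forecast)) / len(demand)
    return 100 * (1 - mse / variance(demand))


demand = [100, 120, 80, 110, 90]
good_forecast = [105, 115, 85, 105, 95]   # follows the demand pattern
bad_forecast = [80, 90, 120, 85, 125]     # moves against the demand pattern

print(pve(demand, good_forecast))  # 87.5
print(pve(demand, bad_forecast))   # -375.0
```

Under this assumed form, the second forecast has errors larger than the demand's own variation around its average, which is what a negative PVE expresses.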

Tracking signal

The tracking signal is a means of monitoring the bias and reacting to changes in the demand pattern. A high tracking signal (i.e., > 0.6) suggests that there is a systematic error or bias in the forecast. The lower the delta value, the lower the risk of false alarms, but the alarm goes off later than with a high delta. A delta value between 0.1 and 0.3 is recommended. The formulas are as follows:

![]()

![]()

![]()

The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).
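A smoothed tracking signal of this kind can be sketched in Python. The sketch assumes the widely used formulation in which the error and the absolute error are both exponentially smoothed with delta and the signal is the absolute ratio of the two. Starting both smoothed values at zero and the function name are further assumptions, so the product's own formulas may differ.

```python
def tracking_signal(demand, forecast, delta=0.2):
    """|smoothed error| / smoothed absolute error (assumed formulation)."""
    smoothed_error = 0.0      # exponentially smoothed e_t
    smoothed_abs_error = 0.0  # exponentially smoothed |e_t|
    for d, f in zip(demand, forecast):
        e = d - f
        smoothed_error = delta * e + (1 - delta) * smoothed_error
        smoothed_abs_error = delta * abs(e) + (1 - delta) * smoothed_abs_error
    if smoothed_abs_error == 0:
        return 0.0  # no errors at all, so no bias signal
    return abs(smoothed_error) / smoothed_abs_error


forecast = [100, 100, 100, 100]
alternating = [110, 90, 110, 90]   # errors change sign every period
biased = [110, 112, 108, 115]      # demand is always above the forecast

print(round(tracking_signal(alternating, forecast), 3))  # 0.111
print(tracking_signal(biased, forecast))                 # 1.0
```

The alternating series stays well below the 0.6 threshold, while the consistently under-forecasted series reaches the maximum value of 1.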

Adjustment Factor

The formulas for the Adjustment Factor are as follows:

![]()

![]()

The adjustment factor expresses the difference between the user's adjusted forecast (historical forecast) and a pure mathematical forecast (explanation forecast). An adjustment factor of 10 means that the user-adjusted forecast is 10% more accurate than the pure mathematical forecast; an adjustment factor of -10 means that the pure mathematical forecast is the better one. Items with a high negative adjustment factor should benefit from a pure mathematical forecast (hands off). The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).

Performance Factor

The formula for the Performance Factor is as follows:

Here, Variance is the variation in the demand pattern of the part. Items with high demand variance require extra attention because they are difficult to forecast.

MSE is an abbreviation for mean squared error; it expresses the squared inaccuracy in the forecast for each item. Items with the highest MSE benefit from increased forecast accuracy.

Items with a high Performance Factor need to be handled attentively: compared with their demand variance, their forecast accuracy is low. A Performance Factor above 1 means that the deviation of actual demand from the forecast is higher than the fluctuation of demand around its average. The formula is evaluated over the most recent n historical periods, counting back from the latest historical period, where n is the number of forecast error periods (see Enter General Server Data).
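The interpretation of a Performance Factor above 1 can be sketched in Python. The formula itself is not reproduced in this text, so the sketch assumes the ratio MSE / Variance, which matches the description above. The variance divisor n, the function name and the data are also assumptions.

```python
def performance_factor(demand, forecast):
    """Assumed form: MSE of the forecast divided by the variance of demand."""
    n = len(demand)
    mean = sum(demand) / n
    variance = sum((d - mean) ** 2 for d in demand) / n  # divisor n is an assumption
    mse = sum((d - f) ** 2 for d, f in zip(demand, forecast)) / n
    return mse / variance


demand = [100, 120, 80, 110, 90]
flat_average = [100, 100, 100, 100, 100]   # always forecasts the average demand
poor_forecast = [80, 90, 120, 85, 125]     # errors exceed the demand fluctuation

print(performance_factor(demand, flat_average))   # 1.0
print(performance_factor(demand, poor_forecast))  # 4.75
```

Under this assumed form, forecasting the plain average gives exactly 1, so any item above 1 is forecast worse than a flat average would do.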

R Squared

Also called R². This measure is only used when the regression (least squares) or multiple regression forecast model is used. R Squared has a useful interpretation as the proportion of variance in the historical demand that is explained (accounted for) by the regression (when the least squares model is used) or by the selected explanation variables (when multiple regression is used).

Notation:

| Symbol | Meaning |
|---|---|
| Yi | Regression estimate of the demand variable. |
| Di | The demand variable. |

![]()

![]()
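As a hedged illustration, the following Python sketch computes R Squared and R Bar Squared with their textbook definitions: R² = 1 - SSE / SST, and the adjusted value 1 - (1 - R²)(n - 1) / (n - k - 1), where k is the number of explanation variables. It is an assumption that the product uses exactly these forms. The demand values are invented, and the fitted values come from the least squares line 8.3 + 1.9 t through them.

```python
def r_squared(demand, fitted):
    """1 - SSE / SST, using D_i for demand and Y_i for the regression estimate."""
    mean = sum(demand) / len(demand)
    ss_res = sum((d - y) ** 2 for d, y in zip(demand, fitted))
    ss_tot = sum((d - mean) ** 2 for d in demand)
    return 1 - ss_res / ss_tot


def r_bar_squared(demand, fitted, n_explanatory):
    """Adjusted R squared: penalizes additional explanation variables."""
    n = len(demand)
    r2 = r_squared(demand, fitted)
    return 1 - (1 - r2) * (n - 1) / (n - n_explanatory - 1)


demand = [10, 12, 15, 15, 18]
fitted = [8.3 + 1.9 * t for t in range(1, 6)]  # least squares line for this data

print(round(r_squared(demand, fitted), 3))         # 0.95
print(round(r_bar_squared(demand, fitted, 1), 3))  # 0.933
```

The adjusted value is always at most R², and the gap grows as more explanation variables are added relative to the number of observations.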

Service Level

Service Level denotes the percentage of days within the Forecast Error Periods on which the product was available to the customer. A day is treated as sold out when no sales are reported for that day and the quantity in stock is 0 at the end of the day. The Service Level measurement is computed as follows:

![]()
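The sold-out rule described above can be sketched in Python. The sketch assumes that Service Level is the share of days that are not sold out, expressed as a percentage. The function name and the daily data are illustrative.

```python
def service_level(daily_sales, end_of_day_stock):
    """Percentage of days that are not sold out.

    A day counts as sold out when it has no sales AND zero stock at day end.
    """
    days = list(zip(daily_sales, end_of_day_stock))
    sold_out_days = sum(1 for sales, stock in days if sales == 0 and stock == 0)
    return 100 * (len(days) - sold_out_days) / len(days)


# Ten illustrative days; stock is replenished on days 5 and 9.
daily_sales = [5, 3, 0, 0, 4, 0, 6, 0, 2, 1]
end_of_day_stock = [3, 0, 0, 0, 6, 6, 0, 0, 8, 7]

print(service_level(daily_sales, end_of_day_stock))  # 70.0
```

Days 3, 4 and 8 are sold out. Day 2 ends with zero stock but has sales, and day 6 has no sales but stock on hand, so neither counts as sold out.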

Fill Rate Service

Fill Rate Service denotes the percentage of the quantity demanded by customers that the entity satisfied within the Forecast Error Periods. It is the ratio between Qty Delivered and Total Qty Demanded by customers, and gives the user an idea of the percentage of sales won due to product availability. The Fill Rate Service measurement is computed as follows:

![]()

![]()

The Qty Lost is the demand expected on sold out days. A day is treated as sold out when no sales are recorded on that day and the quantity in stock/on hand is 0 at the end of the day. The Qty Lost for the sold out days can be calculated using either the System Forecast or the Adjusted Forecast. An Advance Server setting lets the user select which of these two options is used for the sold out days compensation (Qty Lost) calculation. System Forecast is the default method.
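The Fill Rate Service calculation can be sketched in Python. The sketch assumes that Total Qty Demanded equals Qty Delivered plus Qty Lost, and that Qty Lost is the sum of the daily forecast on sold out days. The function name and the data are illustrative, and `daily_forecast` stands for whichever forecast (System or Adjusted) is selected.

```python
def fill_rate_service(daily_sales, end_of_day_stock, daily_forecast):
    """100 * Qty Delivered / (Qty Delivered + Qty Lost), under stated assumptions."""
    qty_delivered = sum(daily_sales)
    # Qty Lost: forecast demand on days with no sales and zero stock at day end.
    qty_lost = sum(
        fc
        for sales, stock, fc in zip(daily_sales, end_of_day_stock, daily_forecast)
        if sales == 0 and stock == 0
    )
    return 100 * qty_delivered / (qty_delivered + qty_lost)


daily_sales = [5, 3, 0, 0, 4, 0, 6, 0, 2, 1]
end_of_day_stock = [3, 0, 0, 0, 6, 6, 0, 0, 8, 7]
daily_forecast = [3] * 10   # illustrative forecast of 3 units per day

print(fill_rate_service(daily_sales, end_of_day_stock, daily_forecast))  # 70.0
```

Here 21 units were delivered and 9 units are counted as lost on the three sold out days, giving a fill rate of 70%.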