| Foundation1 / Administration Aurena / Br And A / Information Sources / Materialized Views / Handling Incremental Load / |

Use this page to learn more about the Data Mart source type Incremental, i.e. where a snapshot table is incrementally updated depending on changes in referenced tables. The incremental load means that only new and modified records will be transferred.

Incremental loading makes it possible to schedule a refresh task that runs rather frequently, thus keeping the target tables up-to-date, not instantly but on a regular basis.

In the target table most of the attributes are calculated and ready to use, meaning that no costly calculations have to be performed once the incremental refresh is ready. This Data Mart source is equal to Materialized Views in this respect, i.e. the target table contains calculated/evaluated values. The difference is that a Materialized View only supports full/complete refresh while the incremental target table is updated with small portions of data.

More information about incremental load can be found here:

Note: The Data Mart specific features and functionality handles all types of Data Mart sources. The incremental framework is however purpose built to handle incremental sources only.

Note: The incremental framework is as accurate as it is possible to describe dependences to referenced tables. Still there can be cases that can not be handled!

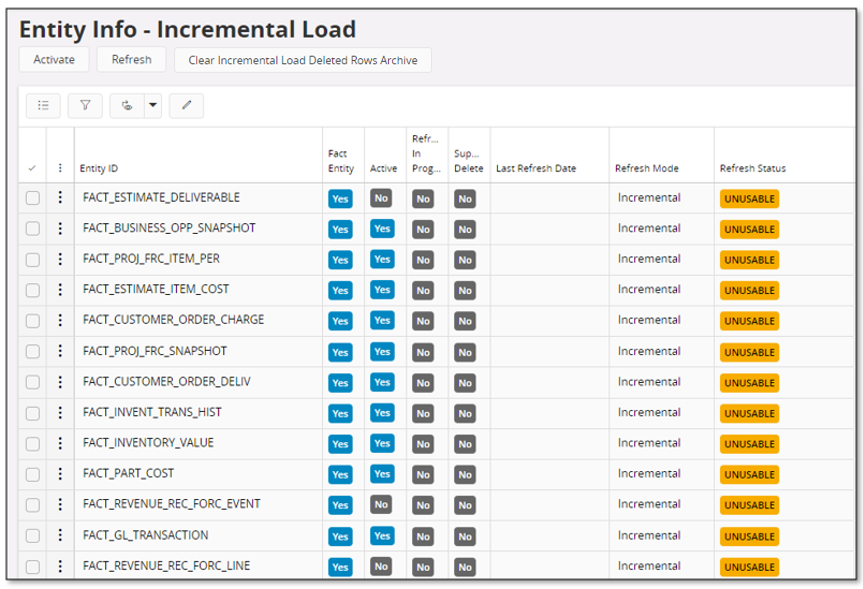

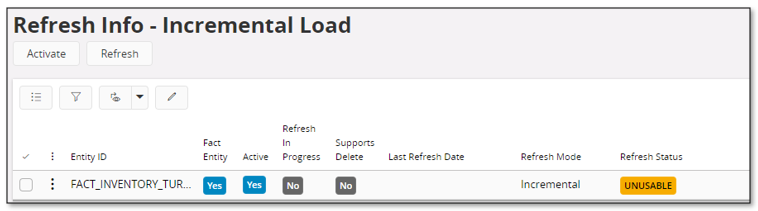

The page Entity Info - Incremental Load can be use to view entities, facts and/or dimensions, that support incremental load.

This overview page contains the following:

| Column | Description |

|---|---|

| Entity ID | Identity of dimension or fact supporting incremental load |

| Fact Entity | Defines if entity is a fact |

| Active | Defines if the incremental entity is marked as active |

| Refresh In Progress | Defines if refresh is ongoing |

| Supports Delete | Defines if the entity supports delete, i.e. if it is possible to also remove transactions from the incremental table that are no longer available in the referenced source tables. |

| Last Refresh Date | Timestamp for last refresh |

| Refresh Mode | Can heave on of the following values:

|

| Refresh Status | Status

|

Navigation can be made to the detail page Entity Info - Incremental Load Detail.

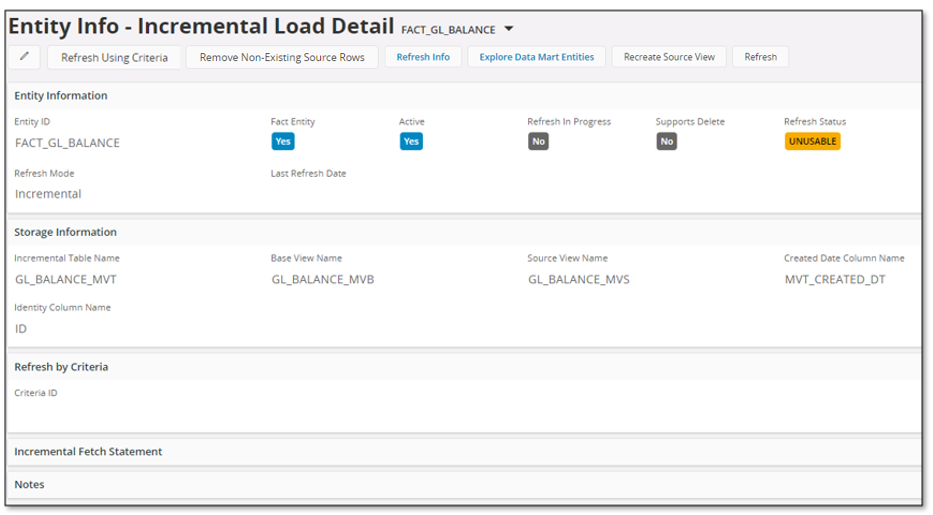

The detail page has the following information:

| Column | Description |

|---|---|

| Entity ID | Identity of dimension or fact supporting incremental load |

| Fact Entity | Defines if entity is a fact |

| Active | Defines if the incremental entity is marked as active |

| Refresh In Progress | Defines if refresh is ongoing |

| Supports Delete | Defines if the entity supports delete, i.e. if it is possible to also remove transactions from the incremental table that are no longer available in the referenced source tables. |

| Refresh Status | Status

|

| Refresh Mode | Can heave on of the following values:

|

| Last Refresh Date | Timestamp for last refresh |

| Storage Information | |

| Incremental Table Name | Name of the target snapshot table that is incrementally updated |

| Base View Name | Name of base view that defines how to fetch attributes for a given entity |

| Source View Name | Name of the source view that as used when fetching data from the base view to the incremental table. |

| Created Date Column Name | Name of the column that stores the created date (timestamp) for each transaction in the incremental table |

| Identity Column Name | Name of the column in the incremental table that represents a unique key |

| Refresh by Criteria | |

| Criteria ID | Identity if a criteria definition that can be used to filter out transactions to be transferred to the incremental table |

| Incremental Fetch Statement | |

| The statement used by the framework when doing the fetch of new/modified records to the incremental table | |

| Notes | |

| Contains specific information about the entity (if at all) | |

The entity pages support the following commands:

Performs the first refresh, creating the first snapshot, and marks the entity as active.

Only available if Refresh Status is UNUSABLE

Performs refresh of the entity according to the Refresh Mode

Opens up an assistant that can be used to remove archived information about deleted rows in the incremental table (MVT).

Supports refresh based on a criteria, i.e. a set of conditions will be used and applied on the Base View to fresh the incremental table.

A typical use case is when it is believed that some transactions in the incremental table are not 100% accurate. The it is possible to define a criteria that e.g. leads to fetch of all transactions created this year. After this the ordinary incremental load can run.

If the entity supports delete of records then the option Remove Non-Existing Source Rows will be available. In most cases the removal of non-existing source rows from the incremental load table is handled automatically by the incremental framework. This option can be used if there is a need to remove non-existing source rows before the a scheduled refresh is executed.

If the refresh fails, e.g. due to that the session for some reason is killed, an entity might still be considered as being in progress. This is visualized in the page Entity Info - Incremental Load by field Refresh In Progress. If it is clear that the refresh is not running, the Reset Refresh Status command can be used to reset the refresh status to make it possible to run a new refresh.

Opens the Refresh Info - Incremental Load page that lists all special tracking Materialized Views (MVs) used to track changes in referenced source tables.

Navigates to the Explore By Information Source page and shows all Data Mart sources referenced by the current entity

Recreates the source view if it is expected that it is not currently correct. Do not use this command unnecessarily.

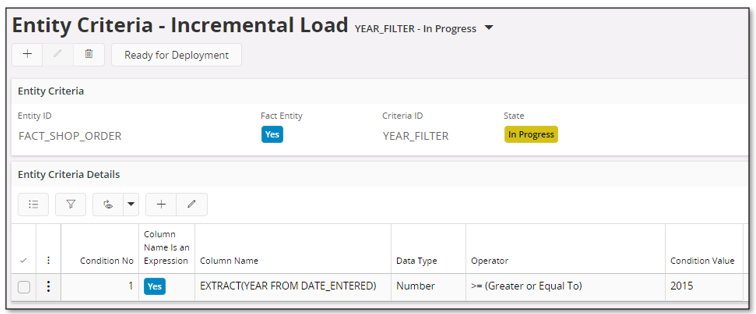

The page Entity Criteria - Incremental Load Detail supports definition of filter conditions, associated to a specific criteria identity, that can be used to filter transactions to be transferred to the incremental table.

A criteria can be used in two ways:

This means that the source view will be modified to also consider the conditions defined for the specified criteria.

This possibility is available as a command Refresh Using Criteria in either the Entity Info - Incremental Load page or the Entity Info - Incremental Load Detail page. The main purpose is to perform a sub-set refresh, where only a smaller part of all transactions are transferred to the incremental table.

Note: It is not possible to modify a criteria definition unless the state has been modified to In Progress

| Column | Description |

|---|---|

| Entity ID | Identity of dimension or fact supporting incremental load |

| Fact Entity | Defines if entity is a fact |

| Criteria ID | Identity of the criteria |

| State | Current state

|

| --- Details --- | |

| Condition No | Sequence number of defined conditions |

| Column Name Is an Expression | Selected if the Column Name contains an

expression and not a table column name. One example is EXTRACT(YEAR FROM DATE_ENTERED) This is an expression based on the table column DATE_ENTERED |

| Column Name | Name of table column that the condition applies to |

| Data Type | Data type, one of Number, String, Date |

| Operator | Operator used for the condition |

| Condition Value | Value to be used for the condition |

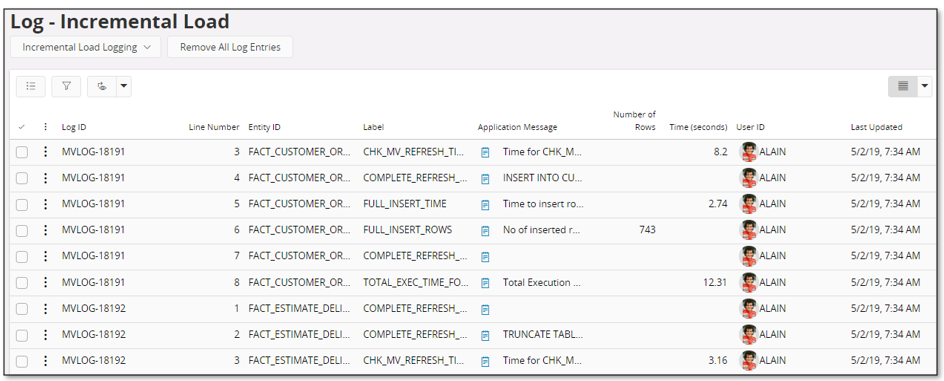

The page Log - Incremental Load logs all steps performed during incremental load.

| Column | Description |

|---|---|

| Log ID | Identity. All steps during incremental of one entity gets the same log identity |

| Line Number | Log step number |

| Entity ID | Identity of entity that the log applies to |

| Label | Log label |

| Application Message | Information |

| Number of Rows | Log message for current label |

| Time (seconds) | Time used in seconds, typically for INSERT, DELETE and CREATE operations |

| User Sign | Identity of user performing the incremental load |

| Last Updated | Timestamp when the log step occurred |

The log can be used to investigate what happens during incremental load.

Note: By default the log is disabled. To enable it, either open the Business Reporter & Analysis - System Parameters page and set the value for parameter Log incremental load actions to TRUE or use the global command Enable under Incremental Load Logging.

The following command are available:

This option clears the log completely. Available on global level.

This option can be used to remove all log lines related to one specific log identity.

There is a scheduled task that cleans up the log once a week.

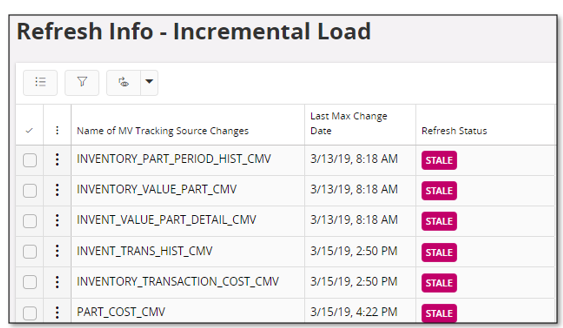

The page Refresh Info - Incremental Load lists all Materialized Views used to track changes in referenced source tables.

| Column | Description |

|---|---|

| Name of MV Tracking Source Changes | Identity if dimension or fact supporting incremental load |

| Last Max Change Date | Timestamp for last refresh |

| Refresh Status | Status of the Materialized View

|

The page support the following commands

Opens the Entity Info - Incremental Load page populated with the entities that are using one specific Tracking MV

During the incremental processing, the last step is to remove non-existing rows in the incremental table (MVT) that no longer exists in the core tables. This is only done for entities that support delete, i.e. Fact/Dimension entities that refer to core entities where rows might get removed as part of ordinary functionality.

When the incremental framework deletes rows the following happens:

The deleted rows archive table is mainly there to support the Analysis Models i.e., it provides a way to transfer information about deleted core rows to SQL Server to ensure that the DW can be kept in synch with core.

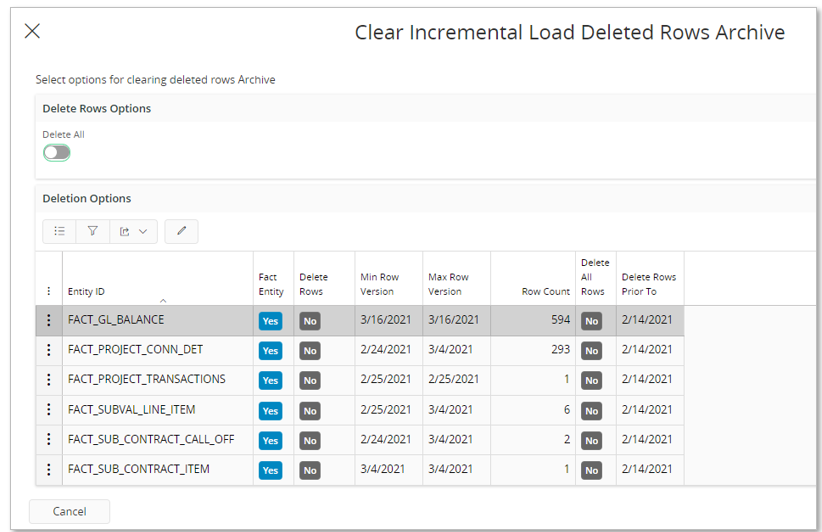

If core transactions are deleted but never replaced, the removed identities will be kept in the archive table. The only way to clean up the stored information is to use the Clear Incremental Load Deleted Rows Archive assistant.

Use the assistant if it has been made sure that archive identities have been considered in the Analysis Models related DW.

The assistant provides two delete options:

To use the first option, enable editing in the Deletion Options group for each entity for which archived rows should be removed:

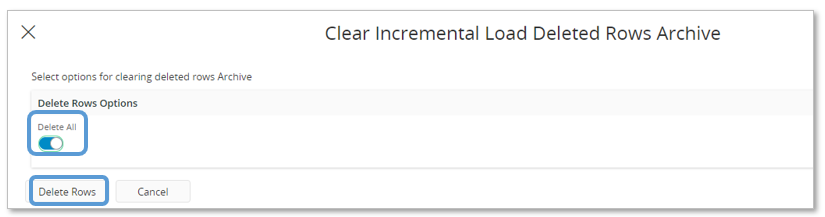

To use the second option, toggle Delete All and then click on Delete Rows.

Define the facts in a special Refresh Category and schedule the refresh of the category.

Important to use incremental load in this case to enhance faster load and refresh of the Data Warehouse

This means that refresh of dimensions does now have to be considered at all in this case.

Define the facts in a special Refresh Category and schedule the refresh of the category

The page can also be used to perform a so called sub-set load, where an entity criteria definition is supplied with the purpose to load a sub set of all transactions, mainly due to that the incremental framework might not be able to track all changes of referenced tables. The sub-set load then gives the possibility to update all transactions meeting the defined conditions, e.g. all created current year, all created the current month etc

One example can be in

Financials where it is of little interest to look at Balances more then a few

years back. In this case a condition like ACCOUNTING_YEAR>2011

makes sure that only transactions older or equal to 2012 are transferred.

To get started with incremental loading requires that a first refresh is done, with the purpose to create a first snapshot. If Refresh Mode is Full then all data will be transferred for each refresh. An attached criteria definition will be considered and the purpose to use one would be to limit the amount of data transferred. This option is a non-incremental option and should only be used if there are some flaws with the incremental loading.

The standard Refresh Mode is Incremental. When the first snapshot is created, using the Activate command, then all data will be transferred to the target table. If a criteria definition is attached to the entity then it will be considered during the initial load but also for all subsequent refresh events. As soon as the first snapshot has been successfully created, then subsequent loads will be handled incrementally.

If the entity represents a large current data volume, the initial load can take a long time. For sources with large volumes it is recommended to do the initial load in steps, a sub-set load. The following can serve as a guideline:

ACCOUNTING_YEAR_KEY, the operator is "=" and the first conditional value is

2016. Make sure that the criteria is in state Ready

.