Working with Test Data

Overview

With the introduction of short-lived environments in the Lifecycle Experience (LE) solution, data that is manually entered into an environment is lost once the environment is deleted. Test data is therefore preserved as scripted test data files that can be deployed in any compatible environment, which allows the end user to quickly set up the initial test data that is needed. This document describes how to use the IFS Test-A-Rest tool to create and deploy test data scripts in an LE environment.

IFS Test-A-Rest

This tool can be used to test IFS OData RESTful APIs and to load test data using simple script files.

Important capabilities:

- Create, modify and read data from IFS OData APIs

- Use parameters in both URLs and body payloads

- Evaluate expressions using C# syntax

- Call other scripts to divide larger tests into smaller units

- Support for authentication

The tool is based on .NET Core 3.1 and can be run on both Windows and Linux.

Getting Started

To install the pre-built binaries, follow the steps below:

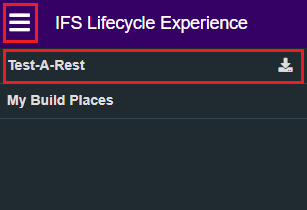

- Get the IFS Test-A-Rest binaries and samples from lifecycle.ifs.com. To do this, navigate to the Lifecycle Experience portal, open the navigator and select the "Test-A-Rest" option. This downloads the latest version of the Test-A-Rest tool. Then unpack the zip file to a suitable location.

- Run the _install.cmd file to register a Test-A-Rest context menu (this step is optional).

- If you want to use a server other than the default, edit the _test_a_rest.cmd file to set the server and credentials.

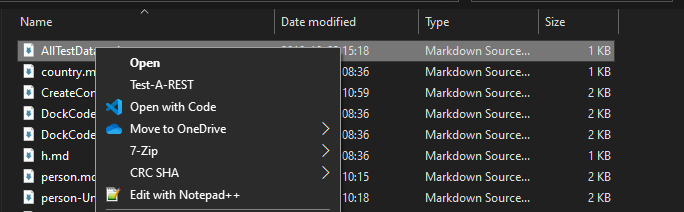

- Try a sample using the Test-A-Rest context menu. Right-click the test file and select "Test-A-Rest".

Basic Usage

Create a simple test data script as follows:

DockCode.md:

# Markdown content to describe what script does

```cs
Create DockCodesHandling.svc/PurchaseDockCodes
{
    "Contract": "1",
    "DockCode": "DockA",
    "Description": "Main Gate"
}
```

The script must be enclosed in a fenced code block (triple backticks), as shown above.

Executing Test-A-Rest

You can run the tool either by using the Test-A-Rest context menu or from the command line using the arguments shown below:

    TestARest.exe ServerUrl=https://xtendlkp-dep-rnd.ifsworld.com Username=ifsapp Password=ifsapp FileToRead=json1.md ResultFilePath=C:\\TAR_logs

To authenticate, it is sufficient to provide a server URL, username and password. Edit the settings in the _test_a_rest.cmd file to use the credentials you want. It is also possible to provide an external access token using the Token argument. If a Token argument is passed, Test-A-Rest will not attempt to perform any logon procedures.

Test-A-Rest has a configuration file named appsettings.config where the default settings can be defined in the following way:

    {
        "LogLevel": "Normal",
        "ServerUrl": "https://someserver:48080",
        "Username": "ifsapp",
        "Password": "ifsapp",
        "Token": "",
        "client_secret": "",
        "FileToRead": "someFile.md",
        "ResultFilePath": "c:\\results",
        "AdditionalUsers": {
            "alain": {"Username": "alain", "Password": "alain"},
            "User2": {"Username": "MFG2", "Password": "mfg2"},
            "User3": {"Username": "MFG3", "Password": "mfg3"}
        }
    }

This makes it possible to run Test-A-Rest without any command line arguments. If command line arguments are given, they take precedence over the settings in the configuration file.

The appsettings.config file should be placed in the folder where Test-A-Rest is installed.
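The precedence rule described above (command line arguments override the configuration file) can be illustrated with a small Python sketch. This is not how Test-A-Rest itself is implemented; the function names `parse_cli_args` and `effective_settings` are hypothetical, and the sketch only assumes the `Key=Value` argument style and the JSON settings shape shown in this section.

```python
import json


def parse_cli_args(argv):
    """Parse Key=Value tokens into a dict; tokens without '=' are ignored."""
    settings = {}
    for token in argv:
        # Split on the first '=' only, so values such as URLs may contain '='.
        key, sep, value = token.partition("=")
        if sep:
            settings[key] = value
    return settings


def effective_settings(config_text, argv):
    """Start from the config file defaults, then let command line values win."""
    merged = dict(json.loads(config_text))
    merged.update(parse_cli_args(argv))
    return merged


# Defaults as they might appear in appsettings.config (values taken from the example above).
config_text = """
{
    "LogLevel": "Normal",
    "ServerUrl": "https://someserver:48080",
    "Username": "ifsapp",
    "FileToRead": "someFile.md"
}
"""

merged = effective_settings(config_text, ["FileToRead=DockCode.md", "LogLevel=Debug"])
print(merged["FileToRead"])  # value from the command line
print(merged["ServerUrl"])   # value from the configuration file
```

Settings that are not given on the command line, such as ServerUrl here, keep the value from the configuration file.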

Test-A-Rest can also be run without a file input, in which case it executes in interactive mode, much like Oracle SQL*Plus.

Start TestARest without the FileToRead parameter to enable interactive mode.

TestARest.exe ServerUrl=https://xtendlkp-dep-rnd.ifsworld.com Username=ifsapp Password=ifsapp

Note

You can always run the available test data scripts again using the Test-A-Rest (TAR) executable as described above. However, re-running the same scripts later without modifying the key values will report errors for the entries that already exist.

Log Level

It's possible to control how much output Test-A-Rest should produce using the LogLevel parameter.

These are the supported log levels:

- Normal (default)

- Information

- Diag

- Debug

Here is how you set the log level to Debug:

TestARest.exe ServerUrl=https://xtendlkp-dep-rnd.ifsworld.com Username=ifsapp Password=ifsapp FileToRead=MyTest.md ResultFilePath=C:\\TAR_logs LogLevel=Debug
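If Test-A-Rest is started from a wrapper script, the argument list can be assembled programmatically. The Python sketch below is only an illustration: the helper name `build_command` is hypothetical, and the check against the four level names listed above is an assumption made for the example rather than documented tool behaviour.

```python
# Log levels listed in this section.
SUPPORTED_LOG_LEVELS = ("Normal", "Information", "Diag", "Debug")


def build_command(executable, settings, log_level="Normal"):
    """Return an argument list in the Key=Value style used by Test-A-Rest."""
    if log_level not in SUPPORTED_LOG_LEVELS:
        raise ValueError(f"Unsupported log level: {log_level}")
    command = [executable]
    command += [f"{key}={value}" for key, value in settings.items()]
    command.append(f"LogLevel={log_level}")
    return command


command = build_command(
    "TestARest.exe",
    {
        "ServerUrl": "https://xtendlkp-dep-rnd.ifsworld.com",
        "Username": "ifsapp",
        "Password": "ifsapp",
        "FileToRead": "MyTest.md",
    },
    log_level="Debug",
)
print(" ".join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` on a machine where the executable is available.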

Test Script Examples

The following files are included in the test-a-rest.zip archive available on lifecycle.ifs.com.

The file below can be used to create both person and country test data:

- AllTestData.md

The files listed below can be used to create person and country test data separately:

- person.md

- country.md

The file below can be used to create a purchasing dock code (with child records) using parameters. Because it uses the "Random()" function, this file can be re-run to create new data:

- DockCodes.md

A sample output of a test script is shown below:

    IFS Test-a-Rest v 0.3 build 2019-10-31
    ======================================
    2019-10-31 13:23:59 Start
    2019-10-31 13:23:59 2WoRLZV7HT
    2019-10-31 13:24:00 Login OK: IFSSESSIONID48080=5FQhxcVAATxXQxuSxBF2REe_hjmXVH6IJAUZXRLeV6j7TR9XPaz_!-200659304; _WL_AUTHCOOKIE_IFSSESSIONID48080=2Nqll4..IXqn0DQcyKCy
    Number of Code blocks found in file: 1
    2019-10-31 13:24:00 Command: Post DockCodesHandling.svc/PurchaseDockCodes
    2019-10-31 13:24:00 Request body:
    {
    "Contract": "1",
    "DockCode": "DockA",
    "Description": "Main Gate"
    }
    2019-10-31 13:24:00 Result (201) Created
    2019-10-31 13:24:00 Response
    {"@odata.context":"https://lkppde1697.corpnet.ifsworld.com:48080/main/ifsapplications/projection/v1/DockCodesHandling.svc/$metadata#PurchaseDockCodes/$entity","@odata.etag":"W/\"Vy8iQUFDSU1HQUFGQUFCcElIQUFBOjIwMTkxMDMxMTMyNDAwIg==\"","luname":"PurchaseDockCode","keyref":"CONTRACT=1^DOCK_CODE=DockA^","Objgrants":null,"Contract":"1","DockCode":"DockA","Description":"Main Gate"}
    2019-10-31 13:24:00 Timings time to first response: 165 ms. Time to response complete 165 ms.
    2019-10-31 13:24:00 End
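When a script contains many commands, it can help to scan the output for results that were not successful. The Python sketch below pairs each "Command:" line with the "Result (status)" line that follows it and reports any status outside the 2xx range. It assumes that failed requests are logged in the same line format as the successful one in the sample above; this is an assumption, and real failure output may look different. The function name `find_failed_commands` and the failing log entry in the example are hypothetical.

```python
import re

# Line shapes taken from the sample output: "<date> <time> Command: ..." and "<date> <time> Result (201) ...".
COMMAND_RE = re.compile(r"^\S+ \S+ Command: (?P<command>.+)$")
RESULT_RE = re.compile(r"^\S+ \S+ Result \((?P<status>\d{3})\)")


def find_failed_commands(log_text):
    """Return (command, status) pairs for results with a non-2xx status code."""
    failures = []
    last_command = None
    for raw_line in log_text.splitlines():
        line = raw_line.strip()
        command_match = COMMAND_RE.match(line)
        if command_match:
            last_command = command_match.group("command")
            continue
        result_match = RESULT_RE.match(line)
        if result_match:
            status = int(result_match.group("status"))
            if not 200 <= status < 300:
                failures.append((last_command, status))
    return failures


sample_log = """\
2019-10-31 13:24:00 Command: Post DockCodesHandling.svc/PurchaseDockCodes
2019-10-31 13:24:00 Result (201) Created
2019-10-31 13:24:01 Command: Post DockCodesHandling.svc/PurchaseDockCodes
2019-10-31 13:24:01 Result (409) Conflict
"""
# The last two lines are a hypothetical failing entry written by analogy with
# the real sample; they are not actual Test-A-Rest output.

failed = find_failed_commands(sample_log)
print(failed)
```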

Setting up baseline Test Data in environments

When a developer requests an LE environment of type DEV, QAS, TOPIC, BAS or DEL, the environment is made available with pre-loaded test data. This data is set up using the existing TAR test data scripts made available by R&D.

Note

Initially some products will have more test data coverage than others.

Test data will be available for the following products:

- Aviation and Defense

- Asset Management

- Financials

- Human Capital Management

- Industries

- Manufacturing

- Projects

- Service Management

- Supply Chain

The test data deployment starts automatically as part of the environment creation request.

Test Data Loading Logs

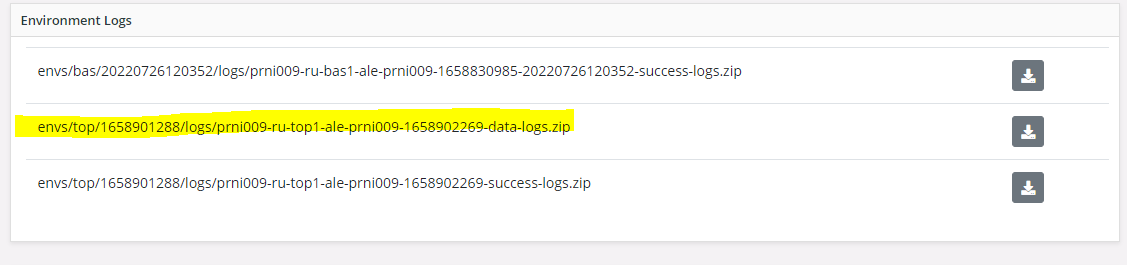

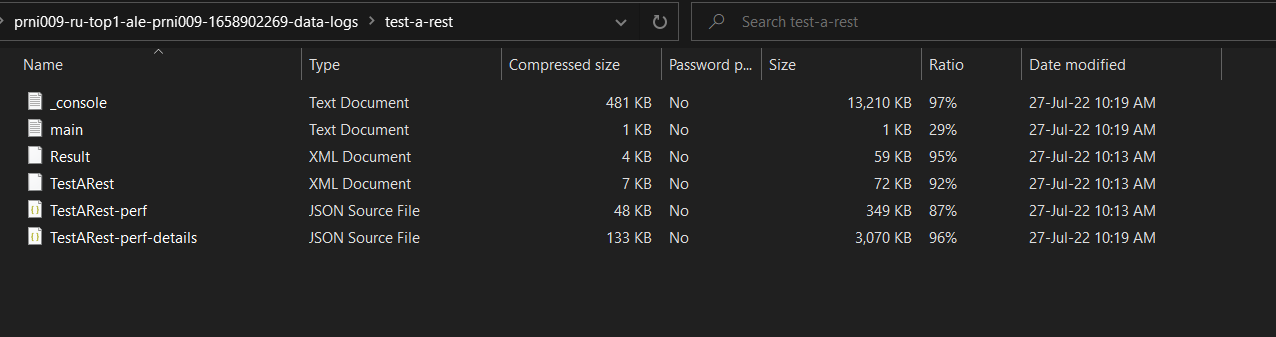

Once the test data deployment has completed, the logs from the operation (test-a-rest logs) are saved as separate zip files and are accessible via the logs page of the Build Place or the Release Update studio.

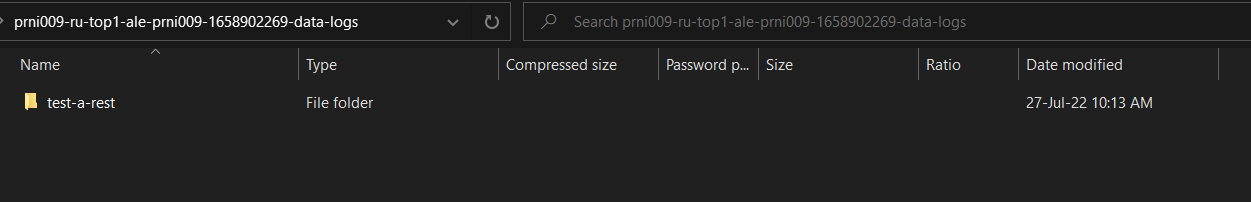

Internal structure of the log file

Files contained inside the test-a-rest folder

Creating and deploying custom Test Data in environments

In addition to the scripts made available by R&D, you can create your own test data scripts for testing customizations you have made in the project. These scripts are created in the same way as the rest of the test data, but they are stored outside the R&D structure for ease of maintenance.

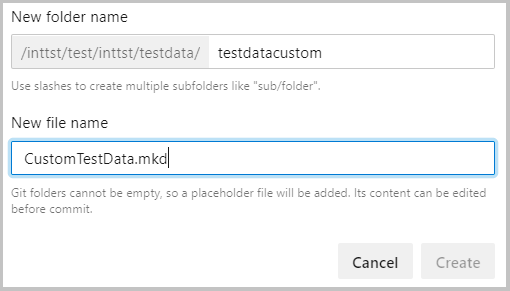

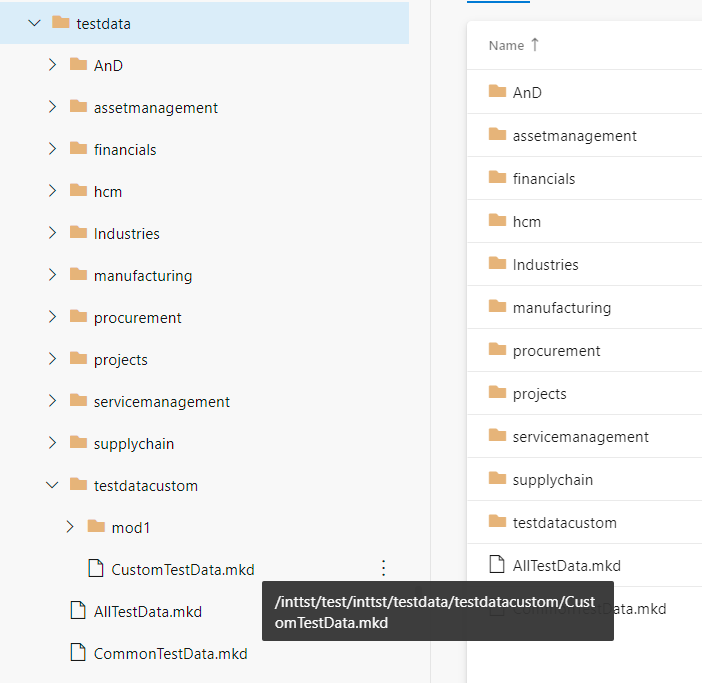

The test data developed for customizations is stored inside the folder testdatacustom. At the start of the project, this folder will either be empty or not yet created. If it does not exist, add the testdatacustom folder inside the test data folder in the INTTST component (inttst/testdata/testdatacustom).

Your custom test data scripts will be called using the master script named CustomTestData.mkd which resides inside the testdata/testdatacustom folder.

You may store your test data scripts in any folder structure you prefer inside the testdatacustom folder, but their calling order and their paths relative to that folder need to be specified correctly in CustomTestData.mkd (if this file doesn't exist, create it). This matters because, once you have added custom test data scripts, any new environment requested from the repository that contains them will first deploy the standard R&D test data and then automatically call CustomTestData.mkd to deploy your custom scripts.

Should it be necessary to modify any of the scripts that are provided by R&D, the process is the same as modifying any other source file in the application. You can alter the R&D-provided scripts to create data that is better suited to the scenario you are testing or to align with modifications made to the application. Any conflicts arising from future updates are resolved following the same process as for the rest of the source code.

Note

It is advisable to first try to cater to such needs by creating a new script and placing it under the "testdatacustom" structure, separate from the core R&D test data scripts for ease of maintenance.

Troubleshooting

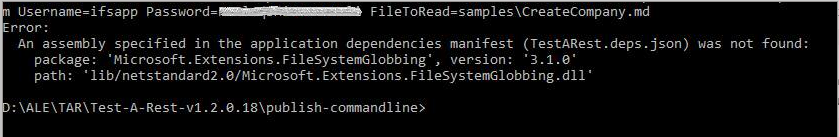

Test Script Execution Failure¶

In some instances your test scripts might fail with an error similar to what is shown below;

In the case of this error occurring, clearing the content of the folder C:\Users<USERNAME>\AppData\Local\Temp.net<APPNAME>... should resolve the issue.

Test Data Loading Failure

If the test data loading operation fails, logs are created and can be accessed via the Build Place Logs page (refer to Test Data Loading Logs for more details). Use these logs for troubleshooting. Once the failed test data scripts are identified, take one of the following two actions:

- Manually deploy the test data, following the instructions given here.

- Delete the existing environment and order another environment.

Note

In the event of a test data loading failure, the environment may have been created with partial data or no data at all, depending on the stage at which the failure occurred. However, the environment can still be used for other operations.